Establishing data as a strategic asset is not easy, and it depends on collaboration across the organization. However, once you have a system of record in place for your data, your organization can implement many valuable data governance use cases more easily.

In this post, we’ll highlight three of the most valuable data governance use cases. Each one depends on a data governance and stewardship function already being in place. For example, if you don’t have an approved taxonomy of what data exists in the business, then you can’t adequately tag data as it flows into your lake.

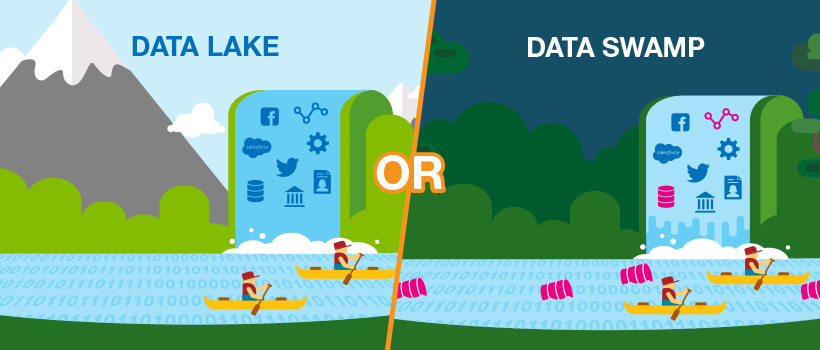

Data lake management: Prevent a data swamp

A data lake is a storage repository that holds a vast amount of raw data in its native format, including structured, semi-structured and unstructured data. The data structure and requirements are not defined until the data is needed.

Oftentimes, organizations interpret the above definition as a reason to dump any data in the lake and let the consumer worry about the rest. But this is how a data lake turns into a data swamp. To avoid a swamp, a data lake needs to be governed, starting from the ingestion of data.

Some key questions to ask when managing your data lake are:

- Should this data be centrally stored? Is it better to keep it within the line of business (LOB)? Who does the data belong to?

- Does the data comply with our policies for usage, privacy and security?

- Is the data aligned to our business glossary so people can find and understand what’s in the lake?

- Is the data aligned to a data dictionary?

- Does the data meet data quality requirements?

Once you have worked through the above questions and approved the data, it can go through testing and then into the lake, where your business users can find and shop for it.
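The questions above can be sketched as a simple ingestion gate. This is a minimal illustration, not a prescribed implementation: the check names, the `IngestionRequest` class, and the example dataset are all hypothetical, and in practice the answers would come from your stewards and governance tooling rather than a hand-filled dictionary.

```python
from dataclasses import dataclass, field

# Hypothetical check names, one per governance question in the list above.
CHECKS = (
    "owner_and_location_agreed",   # central vs. LOB storage, and who owns the data
    "policy_compliant",            # usage, privacy and security policies
    "glossary_aligned",            # mapped to the business glossary
    "dictionary_aligned",          # mapped to a data dictionary
    "quality_requirements_met",    # meets data quality requirements
)


@dataclass
class IngestionRequest:
    dataset: str
    answers: dict = field(default_factory=dict)  # check name -> True/False


def failed_checks(request):
    """Return the checks that are unanswered or answered 'no'."""
    return [check for check in CHECKS if not request.answers.get(check, False)]


def ready_for_testing(request):
    """Data moves on to testing only when every question is approved."""
    return not failed_checks(request)


# Example: a feed that has not yet been aligned to a data dictionary.
request = IngestionRequest(
    dataset="web_clickstream",
    answers={
        "owner_and_location_agreed": True,
        "policy_compliant": True,
        "glossary_aligned": True,
        "dictionary_aligned": False,
        "quality_requirements_met": True,
    },
)
print(failed_checks(request))      # ['dictionary_aligned']
print(ready_for_testing(request))  # False
```

Treating an unanswered question the same as a "no" keeps data out of the lake by default, which is the point of governing ingestion.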

Data distribution: Search and shop for data

Imagine the following scenario:

Your company is launching a new product and wants to create a marketing campaign tailored to consumers who are most likely to purchase this new product. These consumers are unique. Creating a program tailored to these consumers’ preferences requires a lot of data. The data is sourced from several places, such as Adobe Analytics and a couple of customer relationship management (CRM) systems (let’s keep it simple).

The search for data begins. It’s not uncommon to spend a month navigating the different LOBs, sifting through technology tools and systems, going back and forth on emails, and sitting in numerous meetings to find and understand what data should – and could – be used.

Make it a data governance use case

But let’s assume your organization has established data as a strategic asset and has put in place a system of record for data. Your team understands that this is a use case for data governance.

In this example, because digital marketing uses Adobe Analytics and CRM data so often, a data sharing agreement is already in place. This is simply an agreement that the digital marketing department can consume the same data that the content marketing department sourced from Adobe Analytics. The agreement details everything from the types of feeds that are available and their refresh frequency to ownership of the data, along with probably 50 other attributes. Think of it as a contract and service level agreement (SLA) around expected data.

The first thing one of the digital marketers would do is check the data catalog, which inventories and organizes all of an organization’s data assets so data consumers can easily discover, understand, trust and access data for any analytical or business purpose. Without governance, a data catalog can have duplicate and redundant datasets, which is inefficient and costly. The marketers, like all data consumers, expect to find data that is fit for purpose. With a data catalog underpinned by data governance, the marketers can be confident about the relevance and value of the datasets because the governance brings context to the data. They can:

- See if the data is tied to any business terms

- Understand where the data came from

- See if it affects any other datasets

- Verify its quality

- Sample the data

- View the history on how other teammates have used the data

When the marketers find the right feed or dataset, they can then add the assets to their shopping cart and request access. To request access, a marketer fills out a form that specifies the format in which the data should be delivered, the applicable data sharing agreements, the purpose of the request, how long the data is needed, and more.

Depending on the level of integration, distributing the data can mean either a task sent to the data custodian to provide access, or a fully automated permission change that also provides the connection to the data.
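The request form and the two distribution paths described above can be sketched as follows. This is an illustrative assumption rather than any catalog product's API: the `AccessRequest` fields mirror the form items named in the text, while the routing labels, the `automated_datasets` set, and the example values are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AccessRequest:
    requester: str
    dataset: str
    delivery_format: str           # desired format for delivery
    sharing_agreement: str         # reference to the data sharing agreement
    purpose: str                   # purpose of the request
    needed_until: Optional[date]   # how long the data is needed


def missing_fields(request):
    """Names of form fields that were left blank."""
    return [name for name, value in vars(request).items() if value in ("", None)]


def route(request, automated_datasets):
    """Decide how an access request is fulfilled.

    automated_datasets: datasets whose integration supports automatic
    permission changes (a hypothetical configuration set).
    """
    if missing_fields(request):
        return "return_to_requester"
    if request.dataset in automated_datasets:
        return "grant_automatically"
    return "create_custodian_task"


request = AccessRequest(
    requester="digital.marketer",
    dataset="adobe_analytics_feed",
    delivery_format="csv",
    sharing_agreement="DSA-marketing",
    purpose="New product campaign targeting",
    needed_until=date(2030, 1, 31),
)
print(route(request, automated_datasets={"adobe_analytics_feed"}))  # grant_automatically
print(route(request, automated_datasets=set()))                     # create_custodian_task
```

Incomplete forms go back to the requester before anything else happens, so neither the custodian nor the automation acts on a request that lacks a purpose or an agreement.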

In this data shopping use case, data governance works as an accelerator (the search for data takes an hour instead of a month) and an insurance policy (the business context in the data catalog assures the marketers that they are shopping for the right data).

Report certification: Reduce your report stack by 80%

If you’ve created a great data asset that will be used across the enterprise, then why not prove that it is fit for purpose? Why risk stepping into a meeting where someone has a similar report with different numbers? Why let a different unit waste time and recreate the same report because they weren’t sure what data was used? These questions are all resolved in the classic data governance use case of report certification.

Report certification rests on a few basic questions:

- Do we have an owner for this asset?

- Can the owner or a delegate help identify the critical data elements?

- Can the data be traced to its source?

- Do we have standards in place, such as data quality rules?

- Can we prove that the standards have been applied and can they be measured at the different hops as the data flows through the organization?

Just like an auditor would stamp a report as correct, a data authority can do the same with a data asset to show that the asset is trustworthy. This is not only a good way to show the value of the asset, but also a great way to weed out reports (or other assets) that are redundant, out of date, or just wrong.
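As a rough sketch, the certification questions above can be expressed as a checklist that reports what still blocks certification. The `Report` fields, the gap messages, and the example report are hypothetical and only illustrate the idea; a real program would pull ownership, lineage, and quality results from its governance tooling.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Report:
    name: str
    owner: Optional[str] = None
    critical_data_elements: list = field(default_factory=list)
    traced_to_source: bool = False
    quality_rules: list = field(default_factory=list)
    # Measured rule results at each hop of the data flow: hop name -> passed?
    quality_results_by_hop: dict = field(default_factory=dict)


def certification_gaps(report):
    """Return the reasons a report cannot be certified yet (empty if none)."""
    gaps = []
    if not report.owner:
        gaps.append("no owner assigned")
    if not report.critical_data_elements:
        gaps.append("critical data elements not identified")
    if not report.traced_to_source:
        gaps.append("data not traced to its source")
    if not report.quality_rules:
        gaps.append("no data quality rules defined")
    if not report.quality_results_by_hop:
        gaps.append("quality not measured at any hop")
    else:
        for hop, passed in report.quality_results_by_hop.items():
            if not passed:
                gaps.append(f"quality rules failing at hop: {hop}")
    return gaps


def is_certified(report):
    return not certification_gaps(report)


report = Report(
    name="Quarterly campaign performance",
    owner="marketing.analytics.lead",
    critical_data_elements=["customer_id", "campaign_id", "revenue"],
    traced_to_source=True,
    quality_rules=["customer_id is not null"],
    quality_results_by_hop={"source": True, "lake": True, "report": False},
)
print(certification_gaps(report))  # ['quality rules failing at hop: report']
print(is_certified(report))        # False
```

Returning the list of gaps, rather than a bare yes or no, gives the owner something to act on and makes redundant or out-of-date reports easier to spot.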

Expanding your governance program to include data governance use cases such as preventing a data swamp, searching and shopping for data, and reducing your report stack can help you boost ROI and show the real value of governance to the business. These use cases are crucial to achieving digital transformation across your enterprise.