What is data quality and why is it important?

Picture this: Your marketing team just launched what should be the most successful campaign of the year. The creative is pixel-perfect. The offer is irresistible. But 30% of your emails bounce because of incorrect addresses. Another 15% go to the wrong segments entirely.

Meanwhile, your data science team has spent three months building an AI model that’s supposed to predict customer churn. When they deploy it, the results are all over the map; the training data was riddled with duplicates and missing values.

This isn’t a hypothetical story. Even today, more than half of data professionals admit they don’t trust their own data. With AI dramatically raising the stakes, that trust gap is becoming a chasm that separates companies that can accelerate their data initiatives from those that remain stuck in endless data clean-up.

The truth? Data quality isn’t a technical issue; it’s a business imperative that’s directly tied to your ability to compete. Every incorrect record, every duplicate entry, every inconsistent field is quietly eroding your revenue, inflating your costs and creating avoidable risks.

What are the benefits of data quality?

Maintaining good data quality helps organizations make better decisions and use their resources more efficiently. It helps companies offer better customer service and maintain compliance with industry regulations. Inconsistent, incomplete or outdated information costs organizations a lot of time and money. Data quality tools can help eliminate many problems caused by bad data.

High-quality data can guide fast, effective data-driven decision-making and reduce some of the liability associated with working with outdated, incomplete or poorly organized data sets.

What defines good data quality?

Data quality solutions tend to focus on a few key areas: the data is complete and accurate, there are no duplicate records and the data is consistent across all databases, in both its format and its values. Good-quality data should meet those criteria and also be timely enough to be useful.

Data quality vs. data integrity

There's some overlap between the definitions of data quality and data integrity. Data quality is a subset of data integrity that focuses on whether the data is complete and valid. Data integrity goes a step further and considers whether the data is useful in its current form. For example, data quality solutions that also cover data integrity may take into account:

- Integration: Is the database or warehouse integrated into your existing systems in a way that offers seamless and timely visibility?

- Observability: Do you have a way of getting alerts about anomalous data, outliers and other issues that might highlight a problem with your data?

- Governance: What policies do you have in place to track the meaning, lineage and impact of your data?

- Enrichment: Can you add context or meaning to internal data by combining it with information from appropriate third-party sources?

Data observability tools and quality solutions can help organizations improve the quality and integrity of their data, turning it into a truly useful strategic asset.

Why is data quality more important now than ever before?

As technology evolves, new challenges to data quality emerge, requiring more advanced tactics. There are many reasons to prioritize data quality solutions.

Driving revenue growth

Your marketing team can’t hit targets with inaccurate customer data. Your sales team can’t close deals when prospect information is wrong. And your executive team can’t make sound decisions with flawed analytics.

Example: A retail company sends personalized promotional emails to its customers. If the customer database contains duplicate or incorrect email addresses, engagement rates drop and the company loses potential sales. By ensuring accurate and complete customer data, businesses can improve targeting, increase conversion rates and boost revenue.

Controlling costs

Poor data quality leads to inefficiencies, unnecessary expenses and wasted resources. Organizations spend millions cleaning and reconciling incorrect data, which adds to their operational costs.

Example: A manufacturing company relies on supplier data to manage inventory. If the data is incorrect, the company may over-order raw materials, leading to excess storage costs, or under-order, causing production delays. Ensuring data accuracy and consistency prevents such costly mistakes and optimizes resource allocation.

Mitigating risk and ensuring compliance

Today’s regulatory environment is complex and rapidly evolving. With AI amplifying both opportunities and risks, the stakes for data quality have never been higher.

Example: A financial institution must comply with Anti-Money Laundering (AML) regulations. If customer transaction data is incomplete or inconsistent, fraudulent activities may go undetected, exposing the company to fines and legal repercussions. High data integrity ensures compliance and protects against financial and reputational risks.

The six key dimensions of data quality

The most successful organizations measure and improve data quality across these six key dimensions:

- Completeness

- Accuracy

- Consistency

- Validity

- Uniqueness

- Integrity

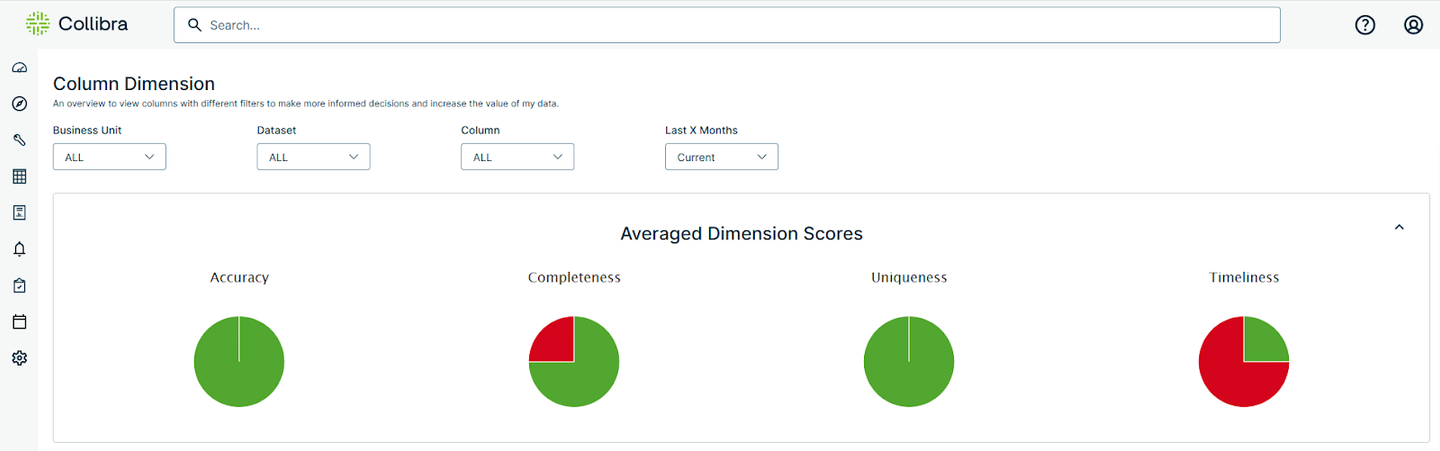

Data quality dimension scores

Completeness

Ensures that all required data attributes are present. Missing values can impact decision-making and analytics.

Example: A customer record missing a phone number may prevent effective follow-up for support or marketing.
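To make the idea concrete, here is a minimal Python sketch of a completeness score: the share of records that have a usable value for each required field. The field names, the sample records and the choice to treat blank strings as missing are all assumptions made for illustration, not rules from any particular tool.

```python
from typing import Any

# Hypothetical required fields; real requirements depend on the business process.
REQUIRED_FIELDS = ("name", "email", "phone")


def is_missing(value: Any) -> bool:
    """Treat None and blank strings as missing (an assumption for this sketch)."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def completeness(
    records: list[dict], fields: tuple[str, ...] = REQUIRED_FIELDS
) -> dict[str, float]:
    """Return, per field, the fraction of records with a non-missing value."""
    if not records:
        return {field: 0.0 for field in fields}
    return {
        field: sum(not is_missing(r.get(field)) for r in records) / len(records)
        for field in fields
    }


# Made-up sample data.
customers = [
    {"name": "Ana Silva", "email": "ana@example.com", "phone": "555-0100"},
    {"name": "Ben Okoro", "email": "ben@example.com", "phone": ""},
    {"name": "Chloe Martin", "email": None, "phone": "555-0102"},
    {"name": "Dev Patel", "email": "dev@example.com", "phone": None},
]

scores = completeness(customers)
print(scores)  # {'name': 1.0, 'email': 0.75, 'phone': 0.5}
```

A per-field score like this makes it easy to see which attributes are holding back follow-up, rather than reporting a single number for the whole table.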

Accuracy

Measures how well data reflects real-world entities and events. Inaccurate data can lead to incorrect analysis and poor business decisions.

Example: Incorrect financial transaction data could misrepresent a company’s revenue and lead to faulty financial reports.
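Accuracy can only be measured against something you trust. The sketch below assumes you have a trusted reference (here, a hypothetical bank statement) and compares recorded transaction amounts against it. The function name, transaction IDs and amounts are invented for illustration.

```python
from decimal import Decimal


def accuracy(
    recorded: dict[str, Decimal], reference: dict[str, Decimal]
) -> tuple[float, list[str]]:
    """Compare recorded amounts with a trusted reference, keyed by transaction ID.

    Returns the share of reference transactions that match, plus the IDs
    that differ or are absent from the recorded data.
    """
    if not reference:
        return 1.0, []
    mismatched = sorted(
        tx_id
        for tx_id, amount in reference.items()
        if recorded.get(tx_id) != amount
    )
    return 1 - len(mismatched) / len(reference), mismatched


# Made-up sample data: T2 was keyed in with two digits transposed.
ledger = {
    "T1": Decimal("100.00"),
    "T2": Decimal("250.50"),
    "T3": Decimal("75.00"),
    "T4": Decimal("19.99"),
}
bank_statement = {
    "T1": Decimal("100.00"),
    "T2": Decimal("205.50"),
    "T3": Decimal("75.00"),
    "T4": Decimal("19.99"),
}

rate, mismatches = accuracy(ledger, bank_statement)
print(rate, mismatches)  # 0.75 ['T2']
```

`Decimal` is used instead of floats so that currency amounts compare exactly.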

Consistency

Ensures that data remains uniform across multiple systems and records. Inconsistent data can create confusion and errors.

Example: A CRM system lists a customer’s birthdate as June 1, while the billing system records it as May 1. This inconsistency can cause verification failures.
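A consistency check along these lines can be sketched in a few lines of Python. The example assumes both systems can be exported as dictionaries keyed by a shared customer ID; the IDs, field name and dates are hypothetical.

```python
from datetime import date


def find_inconsistencies(
    system_a: dict[str, dict], system_b: dict[str, dict], field: str
) -> list[tuple[str, object, object]]:
    """Return (key, value_a, value_b) for keys present in both systems
    whose values for the given field differ."""
    shared_keys = sorted(system_a.keys() & system_b.keys())
    return [
        (key, system_a[key].get(field), system_b[key].get(field))
        for key in shared_keys
        if system_a[key].get(field) != system_b[key].get(field)
    ]


# Made-up sample data mirroring the birthdate example.
crm = {
    "C001": {"birthdate": date(1990, 6, 1)},
    "C002": {"birthdate": date(1985, 3, 14)},
}
billing = {
    "C001": {"birthdate": date(1990, 5, 1)},
    "C002": {"birthdate": date(1985, 3, 14)},
    "C003": {"birthdate": date(1979, 11, 30)},
}

conflicts = find_inconsistencies(crm, billing, "birthdate")
print(conflicts)
```

Records that exist in only one system (such as `C003` here) are deliberately ignored. That is a completeness or integrity question rather than a consistency one.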

Validity

Ensures that data adheres to required formats, rules, or constraints. Invalid data can cause operational errors.

Example: A ZIP code field must contain only numeric values; if a non-numeric character appears, the data is invalid and cannot be processed correctly.
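As a rough illustration, a validity rule like this is often expressed as a pattern match. The sketch below assumes a five-digit US ZIP code; that length is an assumption on top of the numeric-only rule described above, and formats such as ZIP+4 would need a different pattern.

```python
import re

# Assumed rule for this sketch: exactly five ASCII digits.
ZIP_PATTERN = re.compile(r"[0-9]{5}")


def is_valid_zip(value: object) -> bool:
    """Return True only if the value is a string of exactly five digits."""
    return isinstance(value, str) and ZIP_PATTERN.fullmatch(value) is not None


# Made-up sample data: "9021O" ends with the letter O, not a zero.
zip_codes = ["10001", "9021O", "60614", "1234", ""]
invalid = [z for z in zip_codes if not is_valid_zip(z)]
print(invalid)  # ['9021O', '1234', '']
```

Using `fullmatch` rather than `search` matters here: it rejects values that merely contain five digits somewhere inside a longer string.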

Uniqueness

Prevents duplicate records, ensuring a single version of truth. Duplicate data can inflate costs and distort analysis.

Example: A bank has multiple records for the same customer, leading to redundant marketing efforts and duplicate credit risk assessments.
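One simple way to surface duplicates is to group records by a normalized match key. The sketch below uses lowercased name and email as that key, which is an assumption for illustration; real matching usually needs fuzzier rules for nicknames, typos and changed addresses.

```python
from collections import defaultdict


def match_key(record: dict) -> tuple[str, str]:
    """Build a match key from a lowercased, whitespace-collapsed name and email."""
    name = " ".join(record["name"].lower().split())
    email = record["email"].strip().lower()
    return name, email


def find_duplicates(records: list[dict]) -> list[list[str]]:
    """Return groups of record IDs that share the same match key."""
    groups: dict[tuple[str, str], list[str]] = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# Made-up sample data: records 1 and 2 are the same person entered twice.
customers = [
    {"id": "1", "name": "Maria Lopez", "email": "maria@example.com"},
    {"id": "2", "name": "maria  lopez", "email": " Maria@Example.com "},
    {"id": "3", "name": "Sam Lee", "email": "sam@example.com"},
]

duplicates = find_duplicates(customers)
print(duplicates)  # [['1', '2']]
```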

Integrity

Ensures that data relationships remain intact and connected across systems. Broken relationships can lead to incomplete insights.

Example: A healthcare system records a patient’s treatment details separately from their primary medical record, making it difficult for doctors to get a complete history.
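A basic integrity check looks for "orphan" records: child records whose reference to a parent record does not resolve. The sketch below assumes treatments carry a `patient_id` that should match a patient record; the table shapes, field names and IDs are hypothetical.

```python
def find_orphans(
    children: list[dict], parents: list[dict], foreign_key: str, parent_key: str
) -> list[dict]:
    """Return child records whose foreign key matches no parent record."""
    parent_ids = {parent[parent_key] for parent in parents}
    return [child for child in children if child.get(foreign_key) not in parent_ids]


# Made-up sample data: T2 points to an unknown patient, T3 has no patient at all.
patients = [{"patient_id": "P1"}, {"patient_id": "P2"}]
treatments = [
    {"treatment_id": "T1", "patient_id": "P1"},
    {"treatment_id": "T2", "patient_id": "P9"},
    {"treatment_id": "T3", "patient_id": None},
]

orphans = find_orphans(treatments, patients, "patient_id", "patient_id")
print([t["treatment_id"] for t in orphans])  # ['T2', 'T3']
```

Within a single relational database, foreign key constraints can enforce this automatically. A check like this is more useful when the related records live in separate systems.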

Breaking through fragmented governance

When data is fragmented across multiple clouds, applications and on-prem systems, maintaining data quality is challenging. When visibility, access and policies are disjointed across your data ecosystem, quality suffers.

The solution isn’t more spreadsheets or manual processes. Today’s organizations need automated, unified governance for data and AI that works across every data source, system and user.

How Collibra Data Quality and Observability delivers data confidence

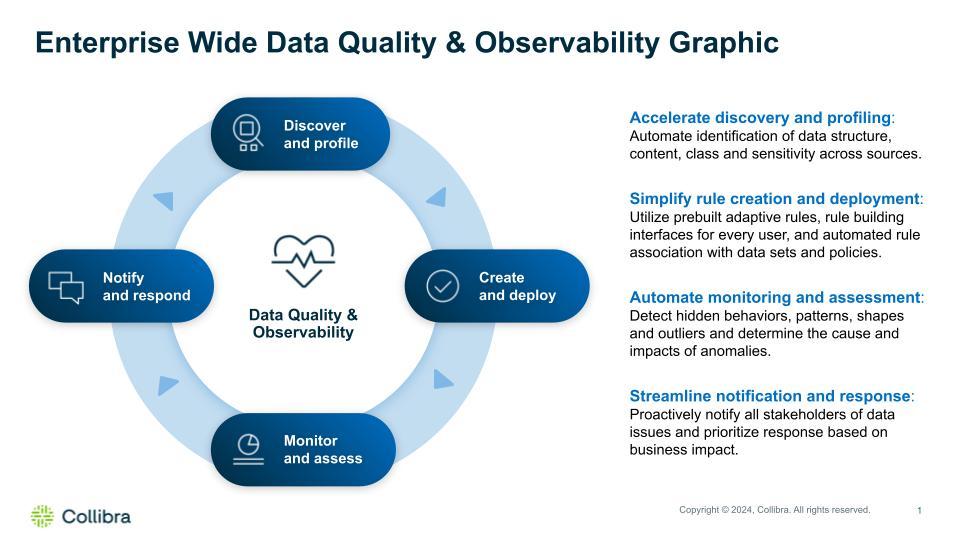

Managing data quality with a continuous cycle

Accelerate discovery and profiling: Automate identification of data structure, content, class and sensitivity across sources.

Simplify rule creation and deployment: Utilize prebuilt adaptive rules, rule building interfaces for every user, and automated rule association with data sets and policies.

Automate monitoring and assessment: Detect hidden behaviors, patterns, shapes and outliers and determine the cause and impacts of anomalies.

Streamline notification and response: Proactively notify all stakeholders of data issues and prioritize response based on business impact.
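For a feel of what automated monitoring involves, here is a deliberately simple Python sketch that flags a daily row count as anomalous when it sits far from the recent average. It is a generic z-score check written for illustration, not a description of how Collibra's product detects anomalies; the threshold and the sample counts are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` sample standard
    deviations from the mean of `history`."""
    if len(history) < 2:
        return False  # Not enough history to estimate spread.
    spread = stdev(history)
    if spread == 0:
        return latest != history[0]
    return abs(latest - mean(history)) / spread > threshold


# Made-up daily row counts for a table that normally loads about 10,000 rows.
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_940, 10_075, 10_005]

print(is_anomalous(daily_row_counts, 4_300))   # True: likely a partial load
print(is_anomalous(daily_row_counts, 10_100))  # False: within normal variation
```

A fixed threshold like this will miss seasonal patterns and gradual drift, which is one reason adaptive rules are attractive for production monitoring.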

See it in action with our product tour.

Final thoughts

High-quality data isn’t a nice-to-have. It’s a strategic asset that drives better decision-making, improves operational efficiency and reduces business risk. By focusing on key dimensions of data quality and implementing unified governance across your entire data ecosystem, you can transform data from a liability into your most important asset.

The organizations that can unify governance across every data source, use case and user will know they’re using reliable, high-quality data and that they can accelerate every data and AI use case.

That’s Data Confidence®.

Is your business leveraging high-quality data for success? We can help you become a data quality expert. Check out our workbook.
