Don’t wreck your data lake with poor quality data

As cloud data storage and advanced analytics become the norm, data quality becomes critical, especially when you need your data lake to power trusted analytical results.

The reality is that poor data quality can lead to bad decisions and financial losses. To make sure you don’t wreck your data lake with poor data quality, this post explores:

- The causes of poor data quality

- The impact of poor data quality on businesses, with examples

- Solutions for improving data quality in your organization

- The importance of active metadata management and data governance

- The benefits of well-managed data lakes for analytics and trust

Join us as we delve into this important topic and learn how to improve the quality of your data.


What causes poor data quality?

Unfortunately, there is a lot of opportunity to introduce poor data quality into your data pipelines. Here are a few of the most common causes:

- Data entry errors

- Incomplete information

- Missing information

- Duplication

- Inconsistency

Other factors contributing to poor data quality include insufficient data validation processes, incompatible data formats, and inadequate data governance policies.
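To make the causes above concrete, here is a minimal sketch of how missing values, duplicates, and inconsistent codes might be flagged in a small batch of records. The records, field names, and helper functions are all hypothetical and chosen only for illustration; a real pipeline would run checks like these against its own schema.

```python
from collections import Counter

# Hypothetical customer records; field names are illustrative only.
records = [
    {"id": 1, "email": "ana@example.com", "country": "US"},
    {"id": 2, "email": "", "country": "US"},                  # missing email
    {"id": 3, "email": "bo@example.com", "country": "usa"},   # inconsistent country code
    {"id": 4, "email": "ana@example.com", "country": "US"},   # duplicate email
]


def find_missing(rows, field):
    """Return ids of rows where the field is absent or empty."""
    return [r["id"] for r in rows if not r.get(field)]


def find_duplicates(rows, field):
    """Return non-empty values of the field that occur more than once."""
    counts = Counter(r[field] for r in rows if r.get(field))
    return sorted(value for value, n in counts.items() if n > 1)


def find_inconsistent(rows, field, allowed):
    """Return ids of rows whose value is not in the allowed set."""
    return [r["id"] for r in rows if r.get(field) not in allowed]


missing_email = find_missing(records, "email")
duplicate_emails = find_duplicates(records, "email")
bad_country = find_inconsistent(records, "country", {"US", "GB", "DE"})
```

Checks like these are cheap to run at ingestion time, which is typically where validation gaps are easiest to close.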

As data volumes grow and more sources are integrated, data quality will only become more important, and more challenging, for data professionals to manage.

The good news is data lakes can provide a solution for businesses looking to improve data quality. By centralizing and storing data in a single location, data lakes can help ensure data consistency and accuracy. And by implementing rigorous data governance policies and processes, organizations can more effectively build an environment of high-quality, trusted data over time.

How poor data quality can lead to bad decisions

Gartner estimates that poor data quality costs organizations an average of $15 million per year. Besides the immediate compliance risk, the long-term business impact extends to disengaged customers, missed opportunities, brand value erosion, and bad business decisions.

The cost of poor data quality is not always measurable. For example, bad decisions based on incomplete or wrong data can result in delayed medical treatment. In one report, mismatched patient data was identified as the third leading cause of preventable death in the United States.

Business decisions based on inaccurate data can affect your strategic planning and long-term outlook. They can also hurt your workforce if employee wellbeing programs and hiring strategies are built on unreliable data.

As organizations increasingly use machine learning, the role of data quality in automated decisions also becomes prominent. A recent Harvard Business Review article highlights that the quality demands of machine learning are steep. And bad data can rear its ugly head twice, both in the historical data used to train the predictive model and in the new data used by that model to make future decisions.

Are you experiencing any of the seven most common data quality issues?

- Duplicate data

- Inaccurate data

- Ambiguous data

- Hidden data

- Inconsistent data

- Too much data

- Data downtime

Why data lakes suffer from quality issues

A data lake acts as a central repository of data. The data can be unstructured and processed as required. Data stored in data lakes can include images, videos, server logs, social media posts, and data from IoT devices. Data lakes do not require a fully defined data structure up front.

Because of their flexibility, data lakes can be highly cost-effective. They also deliver unprecedented scale and speed. But these same advantages can quickly turn data lakes into data swamps.

For example, unstructured data needs rapid processing to feed analytical tools, and choosing the relevant data requires insight into data lineage. Without governance or proper data management, various data quality issues can defeat the purpose of a data lake.

So why do these issues develop in data lakes? There are quite a few reasons.

- Operational inaccuracies: Suppose data is entered incorrectly in one enterprise application. When it reaches the data lake, it can affect all downstream operations. Such operational inaccuracies need immediate correction for reliable processing. Simple data cleansing is not enough for these errors. They need upstream remediation to keep other applications from being affected.

- Integration-level inaccuracies: Data lakes are good for integrating enterprise data silos. However, the integration process itself can introduce inconsistencies across the data attributes. These errors need extensive remediation after integration.

- Diverse data sources: Data comes to data lakes from different sources. These sources may have different formats, redundancies, and inconsistencies. If not addressed in time, they can affect the quality of data lakes.

- Compliance issues: Regulatory privacy compliance requires controlled access to sensitive data. When massive quantities of data arrive in data lakes, identifying and managing access to sensitive data can get complicated.

- Real-time access and updates: When the same data is read and updated at the same time, errors can creep in. These errors can go unnoticed, affecting the quality of the data lake.

How to improve the poor data quality of your data lake

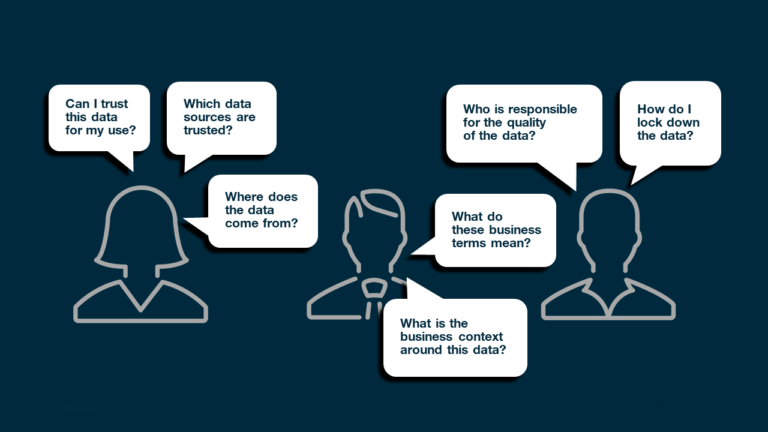

We think data is objective, but it is actually as interpretable as Shakespeare. Unstructured or raw data is open to interpretation, and often to the wrong interpretation. Detecting semantic anomalies is therefore critical to understanding data and its context correctly. Active metadata management and data governance effectively tackle these types of issues.

- Active metadata management: Active metadata graphs connect metadata of diverse information assets, adding rich context to your data. ML-driven active metadata management drives a better understanding of data, essential for high-quality data.

- Data governance: Data governance is a collection of practices and processes to standardize the management of enterprise data assets. A robust data governance foundation along with data intelligence promotes trust in your data lake.

Streamlining the processes with data governance enhances trust in data. For example, clearly defined roles and ownerships help quickly address the data quality issues. With automated initiation of remediation workflows, the issues reach the right data owners.
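As a rough illustration of ownership-based routing, the sketch below sends each detected issue to the owner registered for its dataset and falls back to a steward group when no owner is defined. The `Issue` class, the `OWNERS` registry, and the addresses are hypothetical; this is not how any particular governance product implements its workflows.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    dataset: str
    description: str


# Hypothetical ownership registry: dataset name -> responsible owner.
OWNERS = {
    "sales.orders": "orders-team@example.com",
    "crm.customers": "crm-team@example.com",
}
FALLBACK_OWNER = "data-stewards@example.com"


def route_issues(issues, owners=OWNERS, fallback=FALLBACK_OWNER):
    """Group issues by the owner who should remediate them."""
    queues = {}
    for issue in issues:
        owner = owners.get(issue.dataset, fallback)
        queues.setdefault(owner, []).append(issue)
    return queues


queues = route_issues([
    Issue("sales.orders", "negative order totals"),
    Issue("web.clicks", "timestamps in the future"),   # no registered owner
    Issue("sales.orders", "duplicate order ids"),
])
```

The fallback queue makes gaps in ownership visible instead of letting unowned issues disappear.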

Well-managed data lakes offer universal and secure access to relevant data. They support a deeper understanding of data with catalog and indexing. You can augment them with automation and self-service to enable everyone in your organization to contribute to data quality.

How continuous data quality makes your data lake pristine

Several factors influence the quality of data lakes. While semantic anomalies affect the relevance of data, statistical anomalies affect its accuracy.

Dimensions of data quality, such as completeness, validity, consistency, and integrity, contribute to data accuracy. Measuring these dimensions is integral to data quality assessments. When you measure data quality, you get a better handle on improving it.

Organizations focus on finding and fixing statistical anomalies as soon as data reaches the data lake. They have long relied on manual data quality rules to detect data errors. However, the overwhelming volume and speed of data now make it impossible to manage with manual rules alone.
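Two of these dimensions, completeness and validity, can be expressed as simple ratios. The sketch below is one possible way to score them; the sample rows, field names, and validity checks (a loose email pattern and a positive-amount test) are assumptions made for the example, not standard definitions.

```python
import re

# Hypothetical order rows; field names are illustrative only.
rows = [
    {"order_id": "A1", "amount": "19.99", "email": "x@example.com"},
    {"order_id": "A2", "amount": "", "email": "y@example.com"},
    {"order_id": "A3", "amount": "-5", "email": "not-an-email"},
    {"order_id": "A4", "amount": "42", "email": None},
]

# Deliberately loose email pattern, good enough for a sketch.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def is_filled(value):
    return value not in (None, "")


def completeness(rows, field):
    """Share of rows in which the field has a value."""
    return sum(1 for r in rows if is_filled(r.get(field))) / len(rows)


def validity(rows, field, is_valid):
    """Share of non-empty values that pass the is_valid check."""
    values = [r[field] for r in rows if is_filled(r.get(field))]
    if not values:
        return 1.0
    return sum(1 for v in values if is_valid(v)) / len(values)


def is_positive_number(text):
    try:
        return float(text) > 0
    except ValueError:
        return False


scores = {
    "amount_completeness": completeness(rows, "amount"),
    "email_completeness": completeness(rows, "email"),
    "amount_validity": validity(rows, "amount", is_positive_number),
    "email_validity": validity(rows, "email", lambda v: bool(EMAIL_RE.match(v))),
}
```

Tracking scores like these per dataset over time turns "data quality" from a general impression into numbers you can compare.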

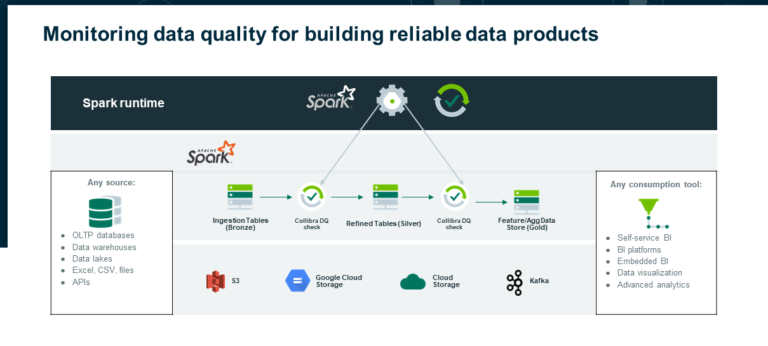

ML-driven auto-discovered data quality rules reduce the dependency on manual rule writing and management. These adaptive rules learn continuously to improve the quality of your data lake. Constant monitoring and quick alerts to the right data owners can remediate data quality issues efficiently.
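The idea of a rule that is learned rather than hand-written can be sketched with basic statistics. The example below derives an acceptable range for a daily row count from past loads and keeps adapting as new in-range values arrive. It is a deliberately simple stand-in (mean plus or minus a multiple of the standard deviation), not the ML approach any specific product uses; the `AdaptiveRangeRule` class and the sample counts are hypothetical.

```python
from statistics import mean, stdev


class AdaptiveRangeRule:
    """Learns an acceptable range from past values instead of a fixed threshold."""

    def __init__(self, history, tolerance=3.0):
        self.history = list(history)   # needs at least two past values
        self.tolerance = tolerance     # how many standard deviations to allow

    def bounds(self):
        mu = mean(self.history)
        sigma = stdev(self.history)
        return mu - self.tolerance * sigma, mu + self.tolerance * sigma

    def check(self, value):
        """Return True if value is within the learned range.

        In-range values are added to the history so the rule keeps adapting;
        out-of-range values are left out so they do not distort the baseline.
        """
        low, high = self.bounds()
        in_range = low <= value <= high
        if in_range:
            self.history.append(value)
        return in_range


# Hypothetical row counts from five previous daily loads.
rule = AdaptiveRangeRule([1000, 1020, 980, 1010, 990])
normal_day = rule.check(1005)    # close to past loads
broken_load = rule.check(120)    # far below anything seen before
```

A failed check would typically raise an alert for the data owner rather than block the load outright; that choice depends on how critical the dataset is.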

A predictive, scalable data quality solution can help you unify your lake across diverse data sources, with constant data monitoring. A sophisticated solution that uses ML to discover and improve data quality rules detects quality issues in real-time. You can then proactively mitigate data quality risks to deliver trusted data for better business decisions.

With a top-tier comprehensive data quality solution, you can always:

- Focus on business-critical data for maximum impact.

- Continuously monitor incoming data for early detection of anomalies.

- Leverage support for remediation workflows with the right data owners.

- Easily reconcile with upstream data sources to address quality issues at the source.

- Automatically discover personal and sensitive data for compliant access.

- Leverage self-service data quality to eliminate IT dependency and improve response time.

Continuous data quality unlocks the value of your data lake and helps you get the most out of your enterprise data. It empowers you to run advanced analytics in the cloud without moving data, delivering real-time insights and ML-driven predictions.

Why data observability is critical for data lakes

Data observability focuses on the health of the entire data chain connected with the data lake. It is a set of tools to actively observe data and maintain its health. Using multiple features, it diagnoses problems early before they affect the downstream operations.

As data ecosystems become more complex, data quality issues can quickly spread and bring the system down like dominoes. Organizations cannot afford long data downtime while responding to constantly changing business scenarios. Data lakes feeding downstream applications are especially vulnerable because they offer universal access to all enterprise users. Data observability is the defining feature that anchors the data to business context and data quality, preventing massive data outages.

Data observability may look like data monitoring, but it actually precedes monitoring. It leverages metadata to understand the context and provides inputs to data monitoring. It also uses data lineage to get insights into governance practices.

Data monitoring takes these inputs and works with a defined set of metrics. It visualizes potential issues and raises alerts on them. Both work together to ensure that the data pipelines are clean and high-quality to power trusted analytics.

Data observability is now considered a critical tool in DataOps, essential for maintaining the health of data lakes. It provides support for collecting pipeline events and correlating them. Then it identifies and examines anomalies or spikes for immediate action.
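The collect, correlate, and flag sequence can be sketched as follows: events are grouped per pipeline, and a run is flagged when its metric jumps well above the median of that pipeline's earlier runs. The event tuples, the pipeline names, and the threshold `factor` are assumptions for illustration only.

```python
from collections import defaultdict
from statistics import median

# Hypothetical pipeline events: (pipeline name, run id, null values observed).
events = [
    ("orders_ingest", 1, 12),
    ("orders_ingest", 2, 10),
    ("orders_ingest", 3, 11),
    ("orders_ingest", 4, 95),
    ("clicks_ingest", 1, 200),
    ("clicks_ingest", 2, 210),
    ("clicks_ingest", 3, 205),
]


def find_spikes(events, factor=3.0, min_history=3):
    """Flag runs whose metric exceeds factor times the median of earlier runs."""
    # Correlate events by pipeline, ordered by run id.
    by_pipeline = defaultdict(list)
    for pipeline, run_id, value in sorted(events, key=lambda e: (e[0], e[1])):
        by_pipeline[pipeline].append((run_id, value))

    spikes = []
    for pipeline, runs in by_pipeline.items():
        # Only judge a run once there is enough history to form a baseline.
        for i in range(min_history, len(runs)):
            baseline = median(v for _, v in runs[:i])
            run_id, value = runs[i]
            if value > factor * baseline:
                spikes.append((pipeline, run_id, value))
    return spikes


spikes = find_spikes(events)
```

Comparing each pipeline only against its own history is what keeps a naturally high-volume feed such as `clicks_ingest` from being flagged.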

Data lakes are rapidly becoming a crucial factor in the organizational cloud strategy, enabling robust data pipelines to all enterprise applications. With the right data quality and observability tools, you can ensure that your data lake doesn’t get bogged down with poor quality data. And you can continue to make better business decisions driven by trusted real-time analytics.

