What is data observability and why is it important?

Data observability enables organizations to monitor, validate, and report on the health of their data in applications, data warehouses and lakes, AI and analytics systems, and data pipelines. Data observability has grown in popularity because modern data architectures have become too complex and dynamic for static monitoring methods to quickly and effectively identify issues, notify stakeholders, and initiate incident management. Using AI and machine learning, data observability solutions improve the reliability of data by increasing the coverage of data quality monitoring and providing a comprehensive view of data health across the organization.

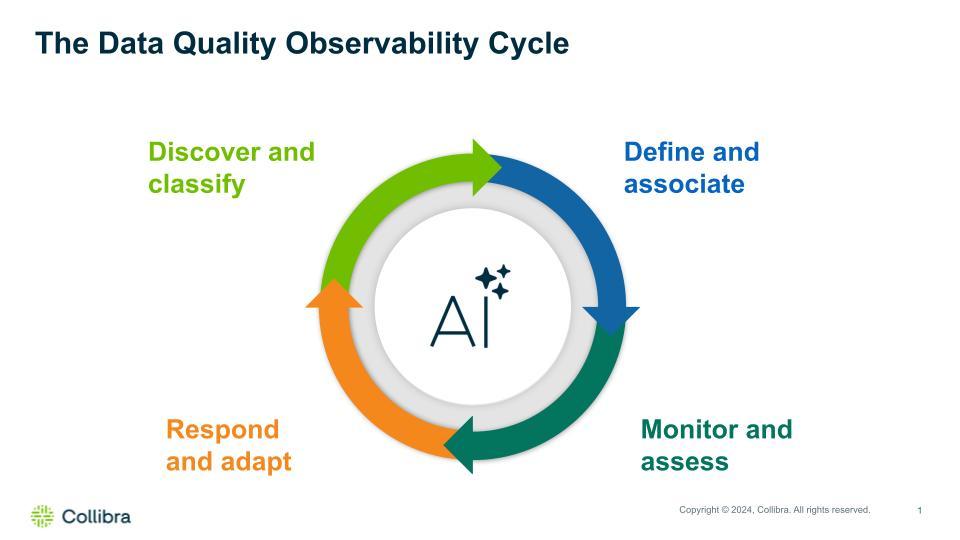

The ‘Data Quality Observability Cycle’

At the heart of effective data observability is the Data Quality Observability Cycle, a continuous process that helps keep your data accurate, complete, timely, and consistent for AI and other critical applications.

This cycle consists of four key stages:

- Discover and classify: Examine metadata to identify data classes, and profile the data to gather statistics on the shape of each dataset, so you understand its structure and quality

- Define and associate: Create policies and rules to validate data quality, and associate them with the relevant datasets so that data remains consistent and trustworthy

- Monitor and assess: Continuously detect genuine anomalies, understand blind spots, and triage issues as they arise so they don't escalate

- Respond and adapt: When issues are detected, respond with an incident management workflow so you can adapt quickly to changing conditions and keep your data healthy
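To make the first two stages more concrete, here is a minimal Python sketch of profiling a column ("discover") and validating records against rules attached to a dataset ("define and associate"). It is illustrative only and is not how Collibra implements the cycle: the `orders` records, the `RULES` mapping, and the helpers `profile_column` and `validate` are hypothetical names chosen for this example.

```python
from statistics import mean

# Hypothetical example; not Collibra's implementation or API.


def profile_column(values):
    """Discover: summarize one column's shape (row count, nulls, distinct values, numeric range)."""
    non_null = [v for v in values if v is not None]
    profile = {
        "rows": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
    }
    # Only compute range statistics when every non-null value is numeric (bools excluded).
    numeric = [v for v in non_null if isinstance(v, (int, float)) and not isinstance(v, bool)]
    if numeric and len(numeric) == len(non_null):
        profile.update(min=min(numeric), max=max(numeric), mean=mean(numeric))
    return profile


# Define and associate: hypothetical rules attached to the columns of an "orders" dataset.
RULES = {
    "order_id": [("not_null", lambda v: v is not None)],
    "amount": [("non_negative", lambda v: v is None or v >= 0)],
}


def validate(rows, rules):
    """Return (row_index, column, rule_name) for every check that fails."""
    failures = []
    for i, row in enumerate(rows):
        for column, checks in rules.items():
            for name, predicate in checks:
                if not predicate(row.get(column)):
                    failures.append((i, column, name))
    return failures


if __name__ == "__main__":
    orders = [
        {"order_id": 1, "amount": 25.0},
        {"order_id": 2, "amount": -5.0},
        {"order_id": None, "amount": 10.0},
    ]
    print(profile_column([r["amount"] for r in orders]))
    # {'rows': 3, 'nulls': 0, 'distinct': 3, 'min': -5.0, 'max': 25.0, 'mean': 10.0}
    print(validate(orders, RULES))
    # [(1, 'amount', 'non_negative'), (2, 'order_id', 'not_null')]
```

The profile is what a team would look at when deciding which rules and thresholds to associate with a dataset; the rules then run on every new batch.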

The exponential growth in the number of data sources, and in the volume of data they hold, creates silos of inaccurate, incomplete, and inconsistent data. Changes to data sources, whether intentional or unintentional, can also break the pipelines that feed downstream AI and analytics applications. The growing volume of data, and the frequency with which it changes, have become too much for data teams to manage without automation and intelligence.

To successfully implement data observability, organizations should look for the following critical data observability features that help automate and streamline data quality monitoring, validation, and reporting:

- Real-time monitoring: Ability to monitor data health and pipeline performance in real time, so issues are detected and stakeholders notified immediately

- Adaptive quality rules: Ability to use machine learning to adjust quality thresholds based on how the data is normally distributed

- Automated anomaly detection: Ability to find missing values and records, schema changes, null values, and duplicates, and to proactively notify stakeholders

- Root cause and lineage analysis: Ability to identify the causes of issues and trace their downstream impact on AI and analytics

- Compliance tracking: Ability to link quality rules to data policies and demonstrate compliance with internal standards and external regulations
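The adaptive-rule and anomaly-detection features above can be illustrated with a small Python sketch. It is a deliberate simplification and not Collibra's method: in place of machine learning it uses a plain statistical baseline (mean plus or minus `k` standard deviations of past observations, with an assumed default of `k=3`) to learn a threshold from history, then checks a batch of records for schema changes, null values, and duplicate keys. The function names, the daily row-count history, and the sample batch are all hypothetical.

```python
from collections import Counter
from statistics import mean, stdev

# Hypothetical sketch; a simple statistical baseline stands in for ML-based thresholds.


def adaptive_bounds(history, k=3.0):
    """Learn an expected range from past observations: mean +/- k standard deviations."""
    if len(history) < 2:
        raise ValueError("need at least two historical observations")
    mu, sigma = mean(history), stdev(history)
    return mu - k * sigma, mu + k * sigma


def is_anomalous(value, history, k=3.0):
    """True when a new observation (e.g. today's row count) falls outside the learned range."""
    low, high = adaptive_bounds(history, k)
    return not (low <= value <= high)


def check_batch(rows, expected_columns, key):
    """Report schema changes, null values, and duplicate keys in one batch of records."""
    issues = []
    expected = set(expected_columns)
    observed = set().union(*(row.keys() for row in rows))
    if expected - observed:
        issues.append(f"schema change: missing columns {sorted(expected - observed)}")
    if observed - expected:
        issues.append(f"schema change: unexpected columns {sorted(observed - expected)}")
    # A column absent from an individual row is counted as null for that row.
    for column in sorted(expected & observed):
        nulls = sum(1 for row in rows if row.get(column) is None)
        if nulls:
            issues.append(f"{nulls} null value(s) in '{column}'")
    # Duplicate detection on the key column, reported in first-seen order.
    counts = Counter(row.get(key) for row in rows)
    dupes = [value for value, n in counts.items() if value is not None and n > 1]
    if dupes:
        issues.append(f"duplicate {key} values: {dupes}")
    return issues


if __name__ == "__main__":
    daily_row_counts = [1000, 1020, 980, 1010, 995, 1005, 990]
    print(is_anomalous(400, daily_row_counts))   # True: far fewer records than usual
    print(is_anomalous(1015, daily_row_counts))  # False: within the learned range
    batch = [
        {"order_id": 1, "amount": 25.0},
        {"order_id": 1, "amount": None},
        {"order_id": 2, "amount": 10.0, "coupon": "X"},
    ]
    for issue in check_batch(batch, ["order_id", "amount"], key="order_id"):
        print(issue)
```

Because the bounds are recomputed from history, the threshold shifts as normal volumes drift, which is the idea behind adaptive quality rules; a production system would likely also model seasonality and trends, which this sketch does not attempt.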

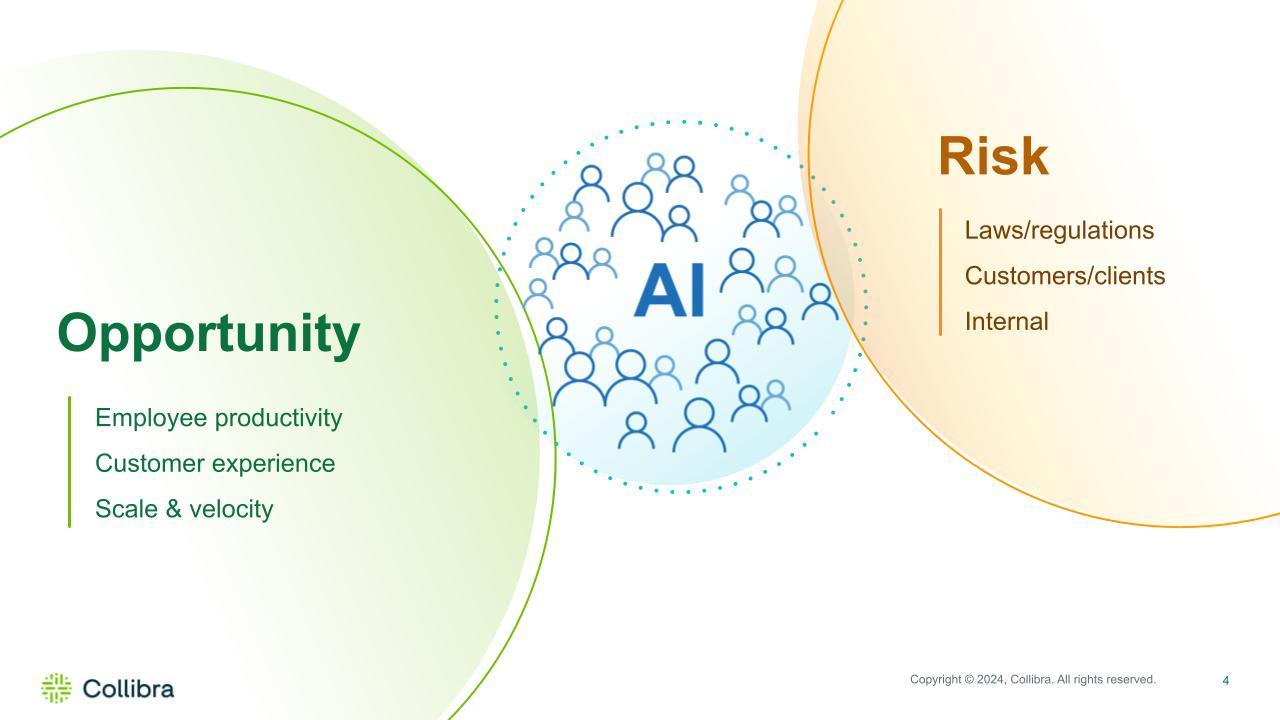

Why Data Quality and Observability are needed in the AI era

Two-thirds of CEOs identify potential errors in their data as the top risk to AI adoption,[1] yet only a third of Chief Data and Analytics Officers say their efforts to improve data quality have been successful.[2] With the right tools and processes in place, organizations can ensure that their AI models are built on a foundation of high-quality, trustworthy data, driving better outcomes and maintaining compliance in an increasingly complex landscape.

Collibra’s Data Quality & Observability offers the following benefits:

- Monitor data pipelines to detect anomalies, so that potential issues are flagged before they can affect AI and analytics.

- Validate data quality and test the integrity of datasets, ensuring that only the most reliable data is used in AI training and decision-making.

- Visualize data health to maintain transparency and meet compliance requirements.

Learn more in our workbook, “Getting to data quality: Data reliability in the AI age.”

***

[1] https://www.cfodive.com/news/67-ceos-see-flawed-data-top-ai-risk-workday-tech-infotech/694013/

[2] https://wwa.wavestone.com/app/uploads/2023/12/DataAI-ExecutiveLeadershipSurveyFinalAsset.pdf
