Scale and simplify data quality operations with pullup processing

There’s a massive disconnect in the world of data, and it’s probably happening in your organization right now. Only 44% of people trust the data they use for business reporting (IDC). This persistent lack of trust translates directly into high organizational costs: poor decision-making costs between $5 million and $25 million annually (Forrester). Data sources that lack native processing compatibility become immediate data quality blind spots, creating unacceptable risk. To achieve reliable data across all systems, we must offer flexible processing options that close critical data quality gaps.

What’s new: Pullup support

We are proud to announce the launch of pullup processing. This crucial capability overcomes a significant barrier in enterprise-wide data quality management. Previously, Data Quality & Observability (Cloud) relied solely on pushdown processing, where data checks run natively on the source system. However, many vital data sources lack the necessary compatibility for this native execution.

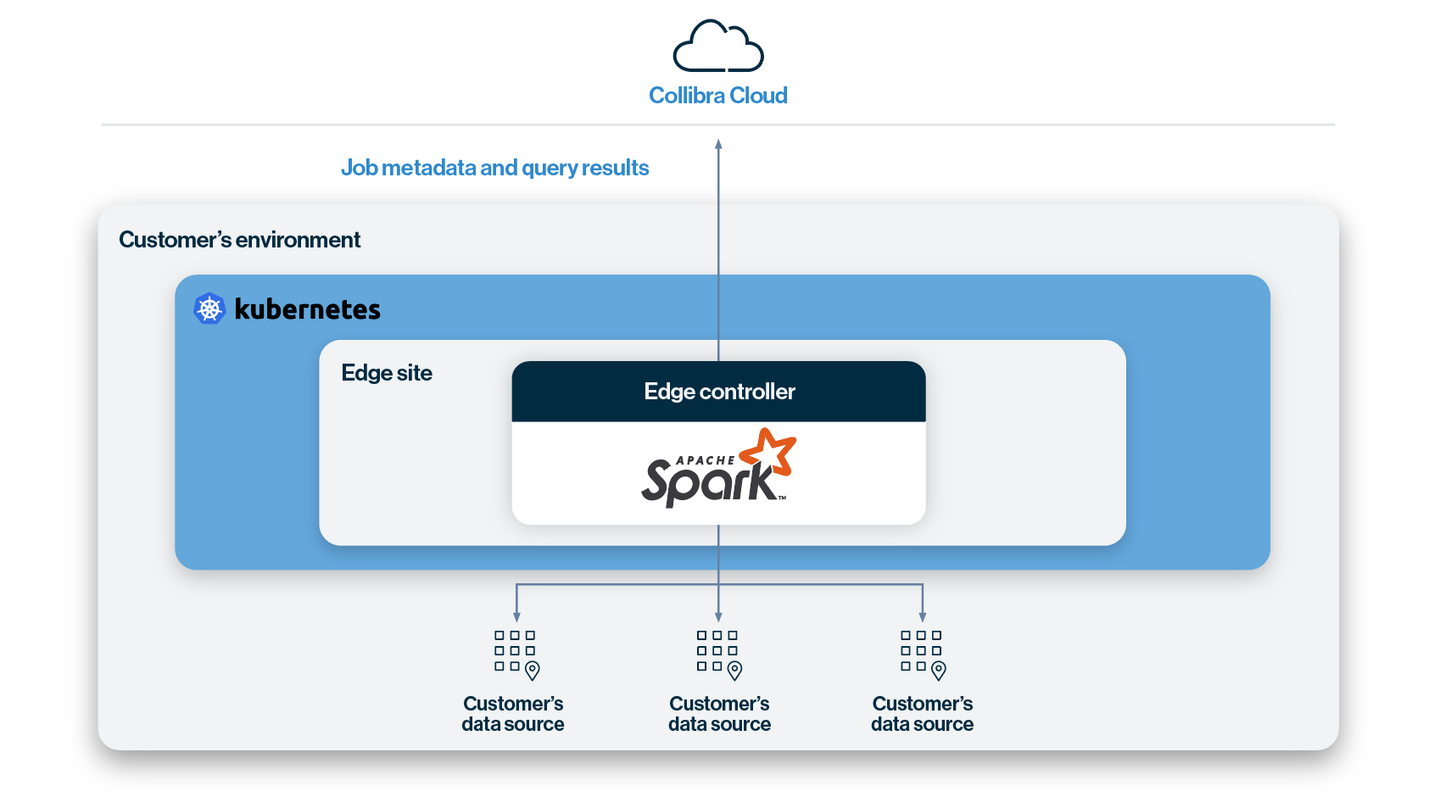

Pullup processing resolves this issue by running DQ jobs on a dedicated Spark engine. The data is moved ("pulled up") to the Spark engine, which is hosted via the Edge agent, for processing. This addition expands data quality coverage to sources that cannot run checks natively, providing the processing flexibility required for reliable data across systems.

How pullup support helps

Relying on pushdown-only processing creates unavoidable governance blind spots, forcing data quality specialists to accept unreliable data from crucial but incompatible sources. This friction undermines the goal of enterprise-wide quality. Pullup processing directly addresses these operational hurdles by introducing flexible job execution and enhanced control via the Edge platform.

This capability solves critical pain points by enabling you to:

• Extend your data quality coverage to more data sources

• Enable data quality job execution on a dedicated Spark engine

• Allow tuning of resource allocation for processing-intensive jobs

• Facilitate onboarding and adoption across Collibra tools

How pullup works

In pullup processing, data is moved from the source system to a dedicated Spark engine, where the Data Quality (DQ) job is executed.

An Edge Administrator or Data Quality Manager first configures the necessary connection within the Edge settings area to specifically include the pullup capability. When configuring a DQ job using a pullup-enabled connection, users gain access to a configuration screen specifically for Spark settings. This configuration allows the Data Quality Manager to tune the job, providing control over computational resources by setting parameters such as executors, memory, driver memory, and partition number. Once configured, the system allows the simultaneous submission of multiple jobs into a queue, and the underlying infrastructure intelligently manages the Spark engine resources to determine the maximum level of parallelization.
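To make the tuning step more concrete, here is a minimal sketch of how the four settings named above (executors, memory, driver memory, and partition number) could be represented and validated. The `SparkSettings` class and its field names are hypothetical and are not part of the Collibra product. The mapping to standard Apache Spark properties is also an assumption; in particular, the "partition number" setting may correspond to something other than `spark.sql.shuffle.partitions`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SparkSettings:
    """Hypothetical container for the four settings named in the text."""
    executors: int
    executor_memory_gb: int
    driver_memory_gb: int
    partitions: int

    def to_spark_conf(self) -> dict[str, str]:
        """Map the settings to standard Spark property names (assumed mapping)."""
        values = (self.executors, self.executor_memory_gb,
                  self.driver_memory_gb, self.partitions)
        if min(values) < 1:
            raise ValueError("all Spark settings must be positive integers")
        return {
            "spark.executor.instances": str(self.executors),
            "spark.executor.memory": f"{self.executor_memory_gb}g",
            "spark.driver.memory": f"{self.driver_memory_gb}g",
            "spark.sql.shuffle.partitions": str(self.partitions),
        }

    def total_memory_gb(self) -> int:
        """Rough memory footprint: all executors plus the driver."""
        return self.executors * self.executor_memory_gb + self.driver_memory_gb


# Illustrative values only; they are not recommendations.
settings = SparkSettings(executors=4, executor_memory_gb=8,
                         driver_memory_gb=2, partitions=16)
conf = settings.to_spark_conf()
```

A footprint estimate like `total_memory_gb()` can help judge whether a job's request is reasonable before it is submitted to the queue.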

Users can view real-time job status updates on the job page and in the Settings-Edge-Jobs window. This release supports standard DQ jobs, Adaptive Rules (AR), and Profiling; layers such as Custom Rules and break record storage will be added later.

Architecture diagram of the pullup connection

Why you should be excited

Pullup support maximizes the governance potential of your Collibra Platform by ensuring comprehensive coverage across your heterogeneous data landscape. Here’s how pullup support benefits key personas across your data team:

- Data Quality Manager (DQM): Gains comprehensive coverage by running DQ jobs against sources previously inaccessible due to pushdown-only limitations

- Platform Administrator: Tunes resource allocation (executors, memory, etc.) for processing-intensive jobs via the Spark configuration screen, and reduces operational complexity and management overhead by leveraging the SaaS model with the self-hosted Edge agent

Key use cases

Pullup support helps data quality managers and platform administrators solve for two key use cases:

- Scaling concurrent data quality checks: A DQ team needs to run 20 simultaneous jobs on pullup-enabled connections. They submit these multiple jobs into a queue, and the system efficiently manages the Spark resources to determine the maximum level of parallelization, ensuring rapid execution of the checks

- Optimizing resource allocation for large profiling jobs: When creating a computationally heavy profiling job, a data engineer accesses the Spark configuration screen during setup. They explicitly allocate specific memory and executor settings to ensure the high-volume job completes within defined performance targets (SLAs) without consuming excess resources
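
The first use case can be illustrated with a simplified model of queued execution. This sketch is not how the platform or Spark schedules work internally; it only shows how a fixed executor capacity bounds the number of jobs that can run in parallel. The function name `schedule_waves`, the per-job executor counts, and the capacity of 16 executors are all assumptions made for illustration.

```python
from collections import deque


def schedule_waves(job_executors: list[int], capacity: int) -> list[list[int]]:
    """Group queued jobs (FIFO) into waves that fit within `capacity` executors.

    Returns a list of waves; each wave is a list of job indices that
    run in parallel in this simplified model.
    """
    queue = deque(enumerate(job_executors))
    waves = []
    while queue:
        free = capacity
        wave = []
        # Admit jobs in submission order while the next one still fits.
        while queue and queue[0][1] <= free:
            idx, need = queue.popleft()
            free -= need
            wave.append(idx)
        if not wave:
            idx, need = queue[0]
            raise ValueError(
                f"job {idx} needs {need} executors, capacity is {capacity}")
        waves.append(wave)
    return waves


# 20 queued jobs, each requesting 2 executors, on an assumed 16-executor engine.
waves = schedule_waves([2] * 20, capacity=16)
max_parallel = max(len(wave) for wave in waves)
```

In this model, the maximum level of parallelization is simply the largest wave. Requesting fewer executors per job raises it, which is the trade-off the Spark configuration screen lets a team tune.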

Key takeaways about pullup support

This initiative significantly improves data quality operations by overcoming previous processing limitations, allowing the platform to ingest and govern a larger volume of diverse data. It reduces complexity in resource allocation, streamlines operations, and enables direct job execution within the unified platform, simplifying data processing and ensuring greater consistency and control for more robust organizational outcomes.

Join Collibra’s Product Premiere to learn:

- The latest platform innovations in our October release

- How Collibra is building on our commitment to reduce complexity and streamline operations with pullup support

- How to get started
