Enabling governed AI everywhere with Collibra model context protocol server

2025 surveys show broad AI experimentation with limited business impact. McKinsey finds that more than 80% of companies report no material contribution to earnings from AI, and that fewer than 10% of use cases make it past the pilot stage. MIT findings estimate that only about 5% of pilots reach production with measurable business results. The key to delivering value with AI agents is integrating them with existing IT systems and processes and grounding them in business context. Model context protocol helps address both of these issues.

What’s new: Collibra model context protocol server

Model context protocol (MCP) is an open-source protocol that standardizes how applications exchange information with large language models (LLMs). It provides a consistent framework for models to interact with data (resources), reusable templates (prompts) and executable functions (tools). In effect, MCP acts as a standard language for AI agents to talk to your data and tools.
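To make the three primitives concrete, here is a minimal Python sketch of the JSON-RPC requests an MCP client sends to use each of them. The method names (`resources/read`, `prompts/get`, `tools/call` and their `list` counterparts) come from the MCP specification; the resource URI, prompt name and tool name are hypothetical placeholders, not names exposed by the Collibra server.

```python
import json
from itertools import count
from typing import Optional

# Standard MCP methods for discovering ("list") and using each primitive.
PRIMITIVE_METHODS = {
    "resources": ("resources/list", "resources/read"),
    "prompts": ("prompts/list", "prompts/get"),
    "tools": ("tools/list", "tools/call"),
}

_request_ids = count(1)  # each JSON-RPC request in a session needs a unique id


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object with a fresh integer id."""
    request = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Hypothetical URI, prompt name and tool name, for illustration only.
read_resource = make_request("resources/read", {"uri": "collibra://assets/example"})
get_prompt = make_request(
    "prompts/get", {"name": "explain_term", "arguments": {"term": "Churn rate"}}
)
call_tool = make_request(
    "tools/call", {"name": "search_assets", "arguments": {"query": "customer churn"}}
)

for message in (read_resource, get_prompt, call_tool):
    print(json.dumps(message))
```

Whatever a given server exposes, the client reaches it through these same few methods, which is what makes MCP a shared language rather than a per-integration API.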

The Collibra model context protocol server allows AI models and agents to access and engage with governed metadata and business context information in the Collibra Platform. This enables copilots and chatbots to interact with Collibra from anywhere and simplifies the integration of Collibra with agents built on LLMs and connected to other agents in complex workflows.

How Collibra model context protocol server helps

The Collibra model context protocol server solves three key challenges for AI developers and users when building and deploying models: inconsistent or low-quality context retrieval for LLMs, fragmented integration workflows and a lack of standardized communication between Collibra and other sources of information. By providing access to centralized and governed metadata and contextual information, the MCP server supports the reliability, accuracy and scalability of AI systems, especially in dynamic and complex environments.

The Collibra model context protocol server addresses:

- Inconsistent, low-quality or incomplete contextual retrieval in AI pipelines

- Complex, manual integration in AI deployments

- Switching between tools to access governance context in AI workflows

- Limited confidence and trust in AI output

How Collibra model context protocol server works

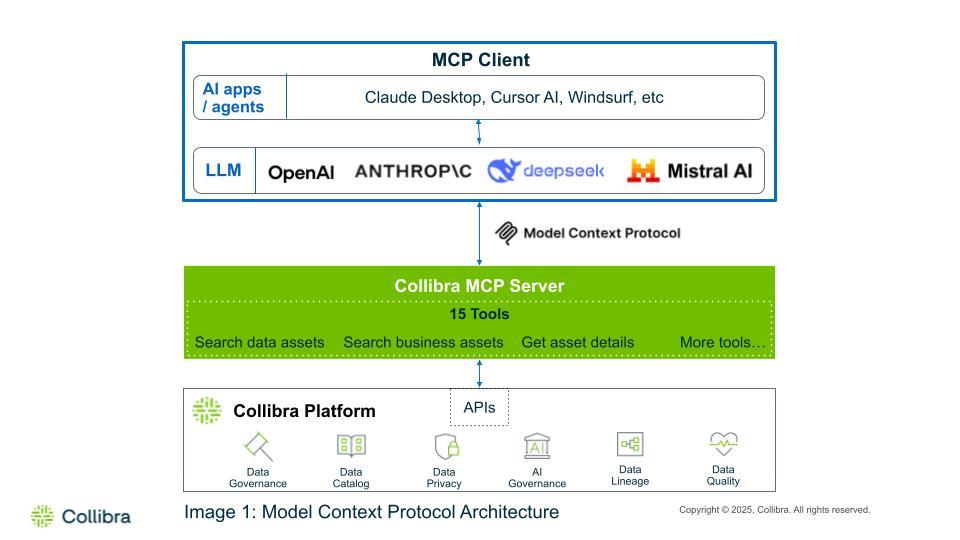

MCP uses a client-server architecture.

- MCP client: The component of an LLM-powered AI application or agent that connects to an MCP server and sends it requests

- MCP server: The server that provides specific functionality and processes requests from MCP clients
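The split between the two roles can be illustrated with a toy, in-process Python sketch: the server registers tools and only answers requests, while the client builds JSON-RPC requests and interprets the replies. There is no real transport or LLM here, and `ToyServer`, `ToyClient` and the `lookup_term` tool are hypothetical. The `tools/list` and `tools/call` method names and the reply shapes follow the MCP specification in simplified form.

```python
import json
from typing import Any, Callable, Dict, Optional

Tool = Callable[..., str]


class ToyServer:
    """In-process stand-in for an MCP server: it registers tools and answers requests."""

    def __init__(self) -> None:
        self.tools: Dict[str, Tool] = {}

    def tool(self, name: str) -> Callable[[Tool], Tool]:
        """Decorator that registers a function under a tool name."""
        def register(fn: Tool) -> Tool:
            self.tools[name] = fn
            return fn
        return register

    def handle(self, raw: str) -> str:
        request = json.loads(raw)
        method, params = request["method"], request.get("params", {})
        if method == "tools/list":
            tools = [{"name": n, "inputSchema": {"type": "object"}} for n in self.tools]
            return self._reply(request["id"], result={"tools": tools})
        if method == "tools/call":
            fn = self.tools.get(params.get("name", ""))
            if fn is None:
                return self._reply(request["id"], error={"code": -32602, "message": "Unknown tool"})
            text = fn(**params.get("arguments", {}))
            content = [{"type": "text", "text": text}]
            return self._reply(request["id"], result={"content": content, "isError": False})
        return self._reply(request["id"], error={"code": -32601, "message": "Method not found"})

    @staticmethod
    def _reply(request_id: int, **payload: Any) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": request_id, **payload})


class ToyClient:
    """In-process stand-in for an MCP client: it sends requests and reads replies."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server
        self.next_id = 1

    def request(self, method: str, params: Optional[dict] = None) -> Any:
        message: Dict[str, Any] = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            message["params"] = params
        self.next_id += 1
        reply = json.loads(self.server.handle(json.dumps(message)))
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]


server = ToyServer()


@server.tool("lookup_term")  # hypothetical tool name
def lookup_term(term: str) -> str:
    glossary = {"churn rate": "Share of customers lost during a period"}  # toy data
    return glossary.get(term.lower(), "No governed term found")


client = ToyClient(server)
tool_names = [t["name"] for t in client.request("tools/list")["tools"]]
reply = client.request("tools/call", {"name": "lookup_term", "arguments": {"term": "Churn rate"}})
print(tool_names, reply["content"][0]["text"])
```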

A typical communication flow includes the following steps:

- Initialization: The MCP client and server establish a connection and agree on the protocol version

- Message exchange: The client sends requests to the server for the information or actions the LLM needs. The server responds with results or notifications, and returns errors when a request fails

- Termination: Either the client or the server can end the connection
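The three steps map onto a session lifecycle. The Python sketch below models it under simplifying assumptions: one client, one server, in-memory dicts instead of a transport, and made-up client and server names. The `initialize` request, the `protocolVersion` negotiation rule (the server echoes a version it supports or proposes its own, and the client disconnects if it cannot use that version) and the `notifications/initialized` message follow the MCP specification; `ClientSession`, `Phase` and `server_handle_initialize` are hypothetical helpers.

```python
from enum import Enum
from typing import List, Optional

# Real MCP protocol revisions, newest first.
SERVER_VERSIONS = ["2025-06-18", "2025-03-26", "2024-11-05"]


class Phase(Enum):
    INITIALIZATION = "initialization"
    OPERATION = "operation"
    TERMINATED = "terminated"


def server_handle_initialize(request: dict, supported: List[str]) -> dict:
    """Server side: echo the client's version if supported, else offer the server's newest."""
    requested = request["params"]["protocolVersion"]
    version = requested if requested in supported else supported[0]
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": version,
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "example-server", "version": "0.1.0"},
        },
    }


class ClientSession:
    def __init__(self, supported: List[str]) -> None:
        self.supported = supported  # newest first
        self.phase = Phase.INITIALIZATION
        self.version: Optional[str] = None

    def initialize_request(self) -> dict:
        return {
            "jsonrpc": "2.0",
            "id": 1,
            "method": "initialize",
            "params": {
                "protocolVersion": self.supported[0],
                "capabilities": {},
                "clientInfo": {"name": "example-client", "version": "0.1.0"},
            },
        }

    def handle_initialize_result(self, response: dict) -> Optional[dict]:
        """Accept the server's version, or terminate if the client cannot speak it."""
        version = response["result"]["protocolVersion"]
        if version not in self.supported:
            self.close()
            return None
        self.version = version
        self.phase = Phase.OPERATION
        # Tells the server the client is ready for normal message exchange.
        return {"jsonrpc": "2.0", "method": "notifications/initialized"}

    def close(self) -> None:
        # MCP defines no shutdown message; closing the transport ends the session.
        self.phase = Phase.TERMINATED


session = ClientSession(["2025-06-18", "2025-03-26"])
reply = server_handle_initialize(session.initialize_request(), SERVER_VERSIONS)
notification = session.handle_initialize_result(reply)
print(session.phase, session.version, notification)
session.close()
```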

MCP communication is organized into two layers:

- Data Layer: Defines what is communicated. It uses JSON-RPC 2.0 to specify:

  - The structure of messages, including requests, responses and errors

  - How to manage the connection lifecycle between a client and a server, such as initialization and capability negotiation

  - The core interaction primitives, including how "tools," "resources" and "prompts" are represented and communicated

- Transport Layer: Manages how the communication occurs. It handles the mechanics of sending and receiving the data defined by the Data Layer, including:

  - The underlying communication mechanisms, such as STDIO for local processes or HTTP/Server-Sent Events for network communication

  - Establishing and managing connections

  - Framing messages for transmission

  - Handling security aspects, such as authorization
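The split between the two layers shows up even in a few lines of code. In this Python sketch, `classify` works purely at the data layer, telling JSON-RPC 2.0 requests, responses, error responses and notifications apart, while `write_message` and `read_messages` handle transport-layer framing the way MCP's STDIO transport does: one JSON message per line. The function names are hypothetical, and an in-memory buffer stands in for real process pipes.

```python
import io
import json
from typing import Iterator, TextIO


def classify(message: dict) -> str:
    """Data layer: distinguish JSON-RPC 2.0 message kinds by the fields they carry."""
    if "method" in message:
        # Requests expect a reply and carry an id; notifications do not.
        return "request" if "id" in message else "notification"
    if "error" in message:
        return "error response"
    if "result" in message:
        return "response"
    raise ValueError("not a JSON-RPC 2.0 message")


def write_message(stream: TextIO, message: dict) -> None:
    # Transport layer (stdio framing): one compact JSON object per line.
    # json.dumps escapes newlines inside strings, so the only raw newline is the delimiter.
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Transport layer: yield one decoded message per non-empty line."""
    for line in stream:
        if line.strip():
            yield json.loads(line)


# An in-memory buffer stands in for the server process's stdin/stdout pipes.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "error": {"code": -32601, "message": "Method not found"}})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
pipe.seek(0)
kinds = [classify(m) for m in read_messages(pipe)]
print(kinds)
```

Because framing lives entirely in the transport functions, swapping STDIO for an HTTP-based transport would leave `classify` and the message shapes untouched.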

Model context protocol architecture

Why you should be excited about Collibra model context protocol server

The Collibra model context protocol server offers benefits to various roles within your organization:

AI developers and data scientists

- Accelerate solution development by automating data discovery in developers’ daily tools, retrieving only governed assets with their technical lineage and business context

- Reduce integration costs by using the Collibra model context protocol server as the standardized access layer to the Collibra Platform

- Improve retrieval accuracy by binding Collibra’s governed business context to physical data assets, so AI search and RAG return precise, context-aware results

Data consumers and business analysts

- Inspire confidence in AI-provided data by including business definitions, asset certifications and other context from Collibra

- Increase productivity by surfacing Collibra context inside the AI interface, eliminating tool switching

- Align stakeholders by ensuring every AI search and RAG response uses the same trusted business context from Collibra

AI governance, compliance and legal teams

- Enforce compliant use by restricting AI to Collibra-certified assets based on data classification and usage policies

- Reduce risk and liability with automated hotlists that flag issues such as misclassification, policy violations and missing owners

- Prove compliance faster with Collibra model context protocol server logs that capture an audit trail of sources, users, classification, policies and ownership
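The governance behaviors listed above boil down to two mechanics: filter what the AI may use, and record why. Here is a minimal Python sketch under loudly stated assumptions: the `Asset` and `AuditEntry` shapes, the classification labels, the policy name and the single missing-owner hotlist rule are all hypothetical and do not reflect Collibra's actual data model, policy engine or log format.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional, Set


@dataclass
class Asset:  # hypothetical shape, not Collibra's data model
    name: str
    certified: bool
    classification: str
    owner: Optional[str]


@dataclass
class AuditEntry:  # hypothetical audit record
    timestamp: str
    user: str
    source: str
    classification: str
    policy: str
    owner: Optional[str]
    allowed: bool


def filter_for_ai(assets: List[Asset], user: str, policy: str,
                  allowed_classes: Set[str], audit_log: List[AuditEntry]) -> List[Asset]:
    """Keep only certified assets whose classification the policy allows; log every decision."""
    permitted = []
    for asset in assets:
        allowed = asset.certified and asset.classification in allowed_classes
        audit_log.append(AuditEntry(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user=user, source=asset.name, classification=asset.classification,
            policy=policy, owner=asset.owner, allowed=allowed,
        ))
        if allowed:
            permitted.append(asset)
    return permitted


def missing_owner_hotlist(assets: List[Asset]) -> List[str]:
    """One simple hotlist rule: flag assets that have no owner."""
    return [a.name for a in assets if not a.owner]


assets = [
    Asset("sales.orders", True, "internal", "Sales Ops"),
    Asset("hr.salaries", True, "restricted", "HR"),
    Asset("tmp.extract", False, "internal", None),
]
log: List[AuditEntry] = []
usable = filter_for_ai(assets, "analyst@example.com", "internal-ai-use", {"public", "internal"}, log)
print([a.name for a in usable], missing_owner_hotlist(assets))
```

Logging denied assets as well as permitted ones is deliberate: an audit trail is most useful when it shows what the AI was kept away from, not just what it used.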

Use cases

The Collibra model context protocol server offers significant value by addressing challenges in AI deployments, such as providing accurate business context for chatbots and streamlining data product comparison and consolidation.

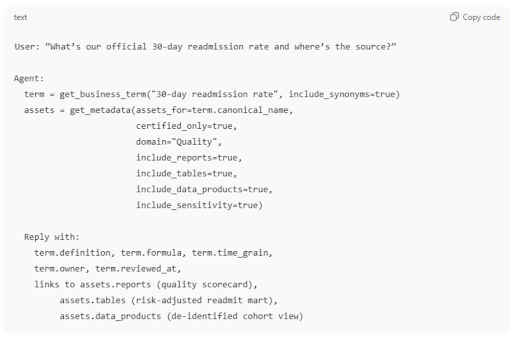

Company-wide chatbot with business context

- Problem: Generic chatbots guess at definitions and point to random assets, leading to KPI confusion, duplicated effort and “which number is right?” debates

- What Collibra model context protocol server adds: Answers in business language, resolves acronyms/synonyms and links only to certified sources

- How it works: Term-lock to governed glossary → fetch certified assets (tables/reports/data products) → respond with definition, formula, grain, owner, last-reviewed and links

- Guardrails: “No governed term means no answer.”

- Wins: Faster time to the right dataset, fewer KPI fights and higher trust in the numbers
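The term-lock flow can be sketched in a few lines of Python. Everything here is hypothetical: the in-memory glossary and catalog stand in for what an agent would retrieve through the Collibra model context protocol server, and the field names, sample term and example.com links are illustrative. The point is the ordering: resolve the phrase to a governed term first, refuse if that fails, and only then return certified assets.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class GlossaryTerm:  # hypothetical shape
    name: str
    definition: str
    formula: str
    grain: str
    owner: str
    last_reviewed: str
    synonyms: List[str] = field(default_factory=list)  # acronyms and aliases


@dataclass
class CatalogAsset:  # hypothetical shape
    name: str
    kind: str  # "table", "report" or "data product"
    certified: bool
    terms: List[str]
    link: str


def term_lock(phrase: str, glossary: List[GlossaryTerm]) -> Optional[GlossaryTerm]:
    """Resolve a user's phrase, acronym or synonym to exactly one governed term."""
    key = phrase.strip().lower()
    for term in glossary:
        if key == term.name.lower() or key in (s.lower() for s in term.synonyms):
            return term
    return None


def answer(phrase: str, glossary: List[GlossaryTerm], catalog: List[CatalogAsset]) -> Dict[str, Any]:
    term = term_lock(phrase, glossary)
    if term is None:
        # Guardrail: no governed term means no answer.
        return {"answer": None, "reason": f"'{phrase}' is not a governed term"}
    links = [a.link for a in catalog if a.certified and term.name in a.terms]
    return {
        "term": term.name, "definition": term.definition, "formula": term.formula,
        "grain": term.grain, "owner": term.owner,
        "last_reviewed": term.last_reviewed, "links": links,
    }


glossary = [GlossaryTerm(
    "Net Revenue Retention", "Recurring revenue kept from existing customers",
    "(starting MRR + expansion - contraction - churn) / starting MRR",
    "monthly", "Finance", "2025-01-15", synonyms=["NRR"],
)]
catalog = [
    CatalogAsset("finance.nrr_monthly", "table", True, ["Net Revenue Retention"], "https://catalog.example.com/a/1"),
    CatalogAsset("sandbox.nrr_copy", "table", False, ["Net Revenue Retention"], "https://catalog.example.com/a/2"),
]
print(answer("nrr", glossary, catalog)["links"])
print(answer("ARR", glossary, catalog)["answer"])
```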

Healthcare chatbot MCP call pattern example

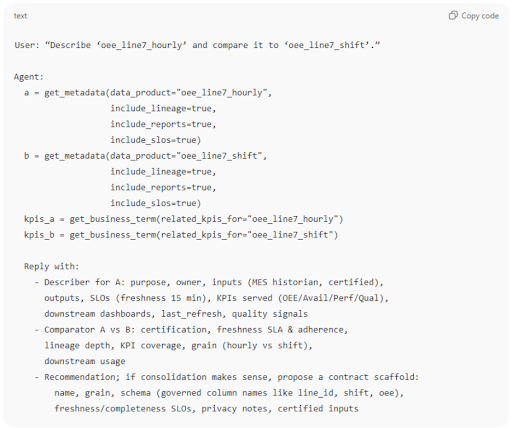

Data product comparison and consolidation

- Problem: Consumers can’t tell which product to use; producers duplicate near-identical products

- What Collibra model context protocol server adds: Auto-generated one-pagers (purpose, inputs/outputs, SLOs, governed KPIs served, owners, usage) and side-by-side comparisons to pick the right product. It can also optionally propose a contract scaffold (schema, grain, SLOs, privacy) based on glossary terms

- How it works: First, pull product metadata, lineage and downstream consumers. Then map governed KPIs/dimensions and compute comparison criteria (certification, freshness, lineage depth, usage, sensitivity)

- Guardrails: Prefer certified inputs; align column names to governed dimensions; surface PII handling explicitly

- Wins: Less duplication, faster fit-for-purpose choices and consistent contracts
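The comparison step can be sketched in Python under assumptions that are ours, not Collibra's: a hypothetical `DataProduct` record holding the criteria named above, a ranking that prefers certified inputs and then freshness, usage and lineage depth in that order, PII surfaced as a flag rather than scored, and KPI overlap (Jaccard similarity) as a simple signal for near-duplicate products. The OEE product names and numbers are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataProduct:  # hypothetical metadata shape
    name: str
    certified: bool
    hours_since_refresh: float  # freshness
    lineage_depth: int          # hops back to source systems
    monthly_users: int          # usage
    contains_pii: bool          # sensitivity, surfaced rather than scored
    kpis: List[str]             # governed KPIs served


def rank_for_kpi(products: List[DataProduct], kpi: str) -> List[DataProduct]:
    """Candidates serving the KPI: certified first, then fresher, more used, shallower lineage."""
    candidates = [p for p in products if kpi in p.kpis]
    return sorted(candidates, key=lambda p: (not p.certified, p.hours_since_refresh,
                                             -p.monthly_users, p.lineage_depth))


def kpi_overlap(a: DataProduct, b: DataProduct) -> float:
    """Jaccard similarity of served KPIs; values near 1.0 suggest consolidation candidates."""
    union = set(a.kpis) | set(b.kpis)
    return len(set(a.kpis) & set(b.kpis)) / len(union) if union else 0.0


def side_by_side(products: List[DataProduct]) -> str:
    rows = [f"{'product':<18}{'certified':<11}{'fresh(h)':<10}{'lineage':<9}{'users':<7}PII"]
    for p in products:
        rows.append(f"{p.name:<18}{str(p.certified):<11}{p.hours_since_refresh:<10g}"
                    f"{p.lineage_depth:<9}{p.monthly_users:<7}{'yes' if p.contains_pii else 'no'}")
    return "\n".join(rows)


products = [
    DataProduct("plant_oee_daily", True, 2.0, 3, 140, False, ["OEE", "Downtime"]),
    DataProduct("oee_copy_v2", False, 30.0, 5, 12, False, ["OEE", "Downtime"]),
    DataProduct("line_throughput", True, 6.0, 2, 80, False, ["Throughput"]),
]
ranked = rank_for_kpi(products, "OEE")
print(side_by_side(ranked))
print(kpi_overlap(products[0], products[1]))
```

The order of the sort key is a design choice; a production comparison would likely weight the criteria according to local policy rather than apply a strict lexicographic order.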

Manufacturing data product comparison MCP call pattern example

Key takeaways about Collibra model context protocol server

Collibra’s model context protocol server enables context-aware AI by providing copilots and agents with a single, standards-based path to centrally governed metadata and business context. This includes physical data assets such as tables, columns, files and reports, as well as business context such as business terms, acronyms and key performance indicators. The Collibra model context protocol server overcomes common challenges such as low-quality LLM context retrieval, fragmented AI workflow integration and limited confidence in AI outputs.

Join Collibra’s Product Premiere to learn how the Collibra model context protocol server provides:

- Faster AI development and deployment: Reuse governance-aware context and connectors to move from proof of concept to production with fewer rewrites

- Increased AI adoption and user productivity: Provide confident, term-locked answers and certified sources within chatbots, copilots and agents

- Greater AI transparency and explainability: Include definitions, owners, lineage, quality metrics and policy references with every response

Where to learn more about Collibra model context protocol server

To learn more about the Collibra model context protocol server and how to install it in your environment, read our documentation.
